How to Fine-Tune a 6 Billion Parameter LLM for Less Than $7

In part 4 of our Generative AI series, we share how to build a system for fine-tuning & serving LLMs in 40 minutes or less.

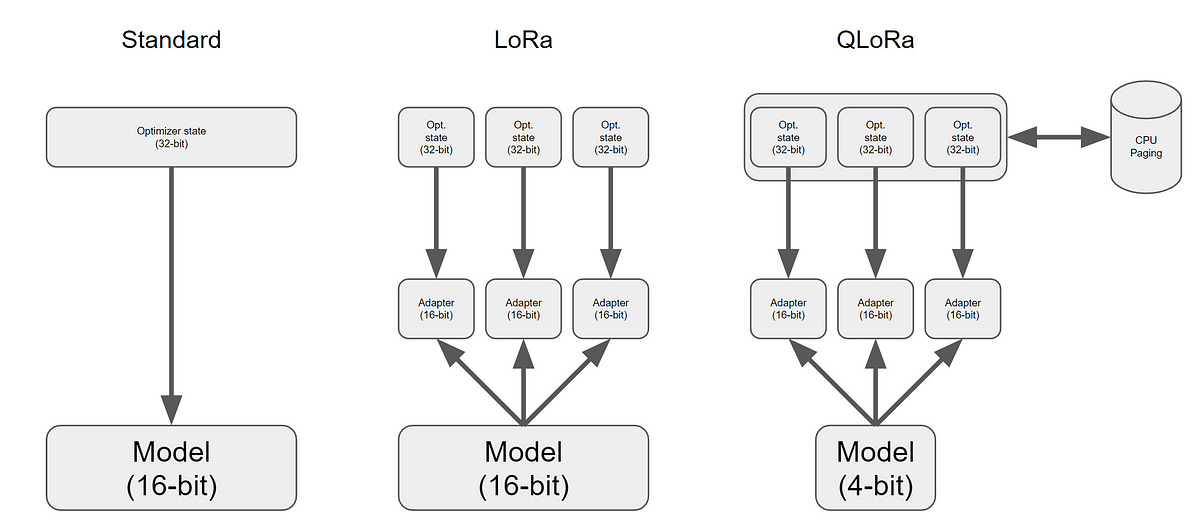

QLoRA: Fine-Tune a Large Language Model on Your GPU

Andrei-Alexandru Tulbure on LinkedIn: Google launches two new open

How to Adapt your LLM for Question Answering with Prompt-Tuning using NVIDIA NeMo and Weights & Biases

Jo Kristian Bergum on LinkedIn: The Mother of all Embedding Models

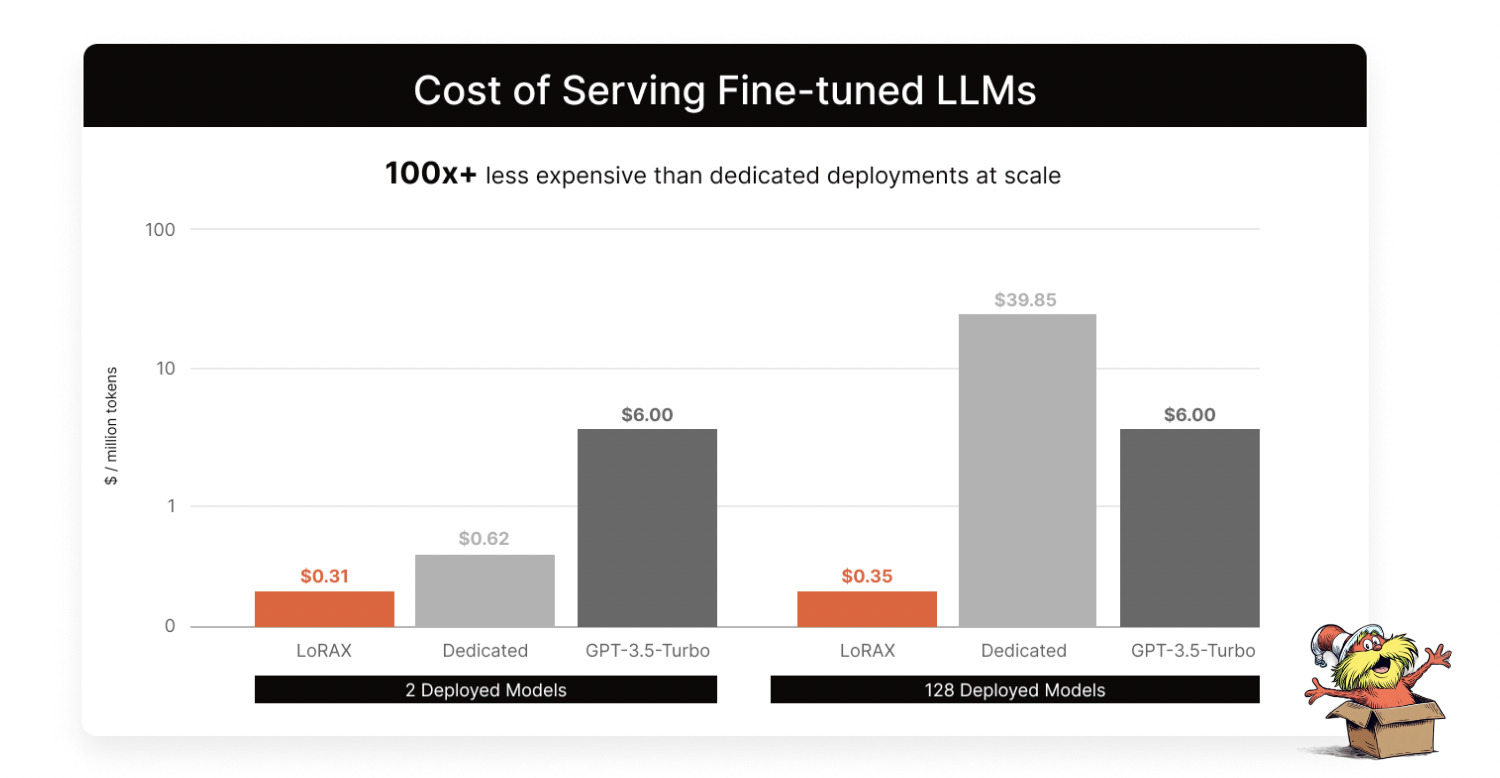

7 Things You Need to Know About Fine-tuning LLMs - Predibase

CLIP by OpenAI: A game-changer in image-text understanding

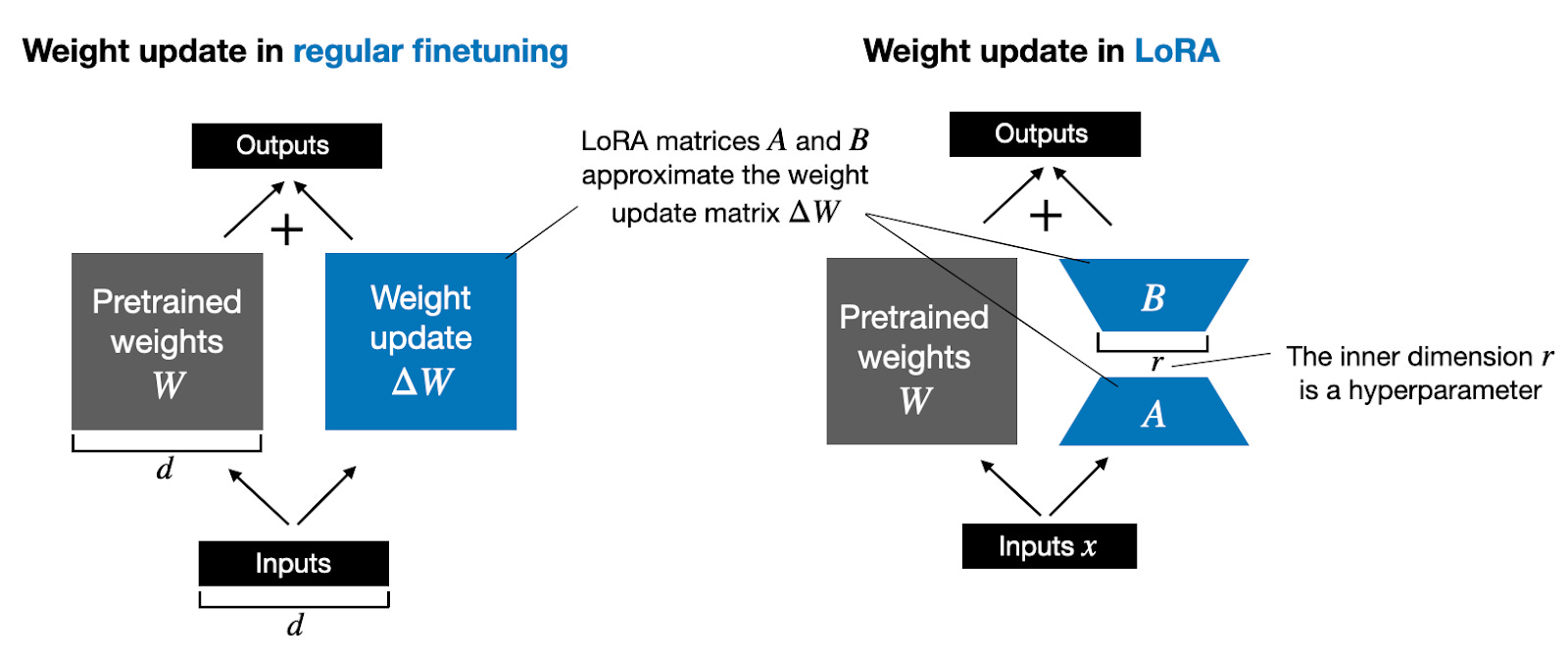

Practical Tips for Finetuning LLMs Using LoRA (Low-Rank Adaptation)

Justin Zhao on LinkedIn: Ludwig v0.8: Open-source Toolkit to Build and Fine-tune Custom LLMs on…

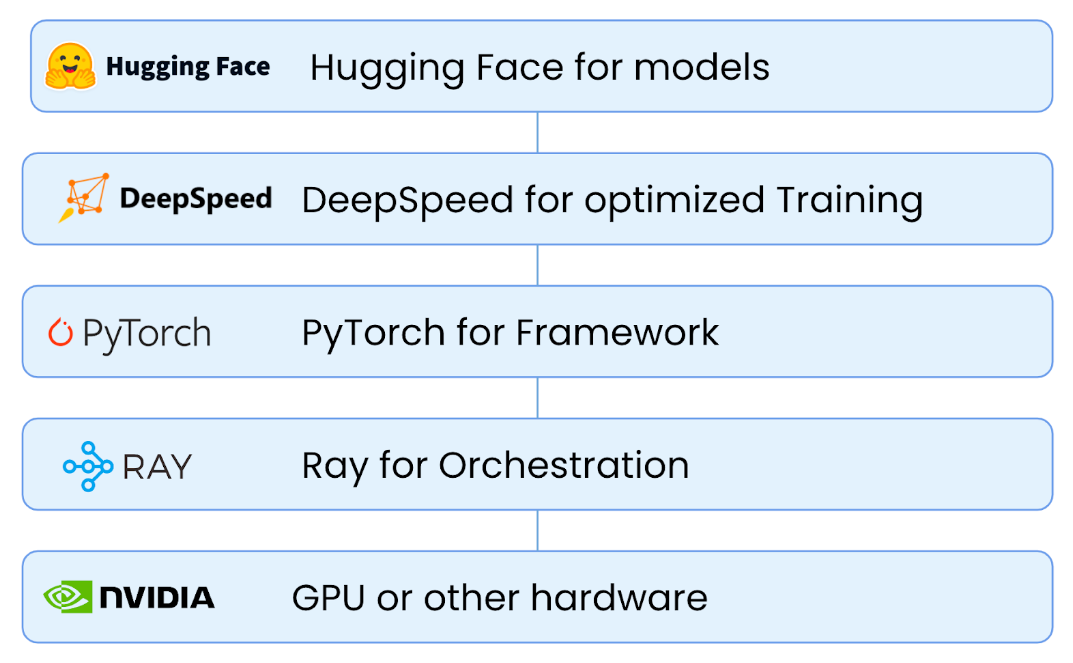

Finetune LLMs on your own consumer hardware using tools from PyTorch and Hugging Face ecosystem

What is low-rank adaptation (LoRA)? - TechTalks

Domain-adaptation Fine-tuning of Foundation Models in SageMaker JumpStart on Financial data

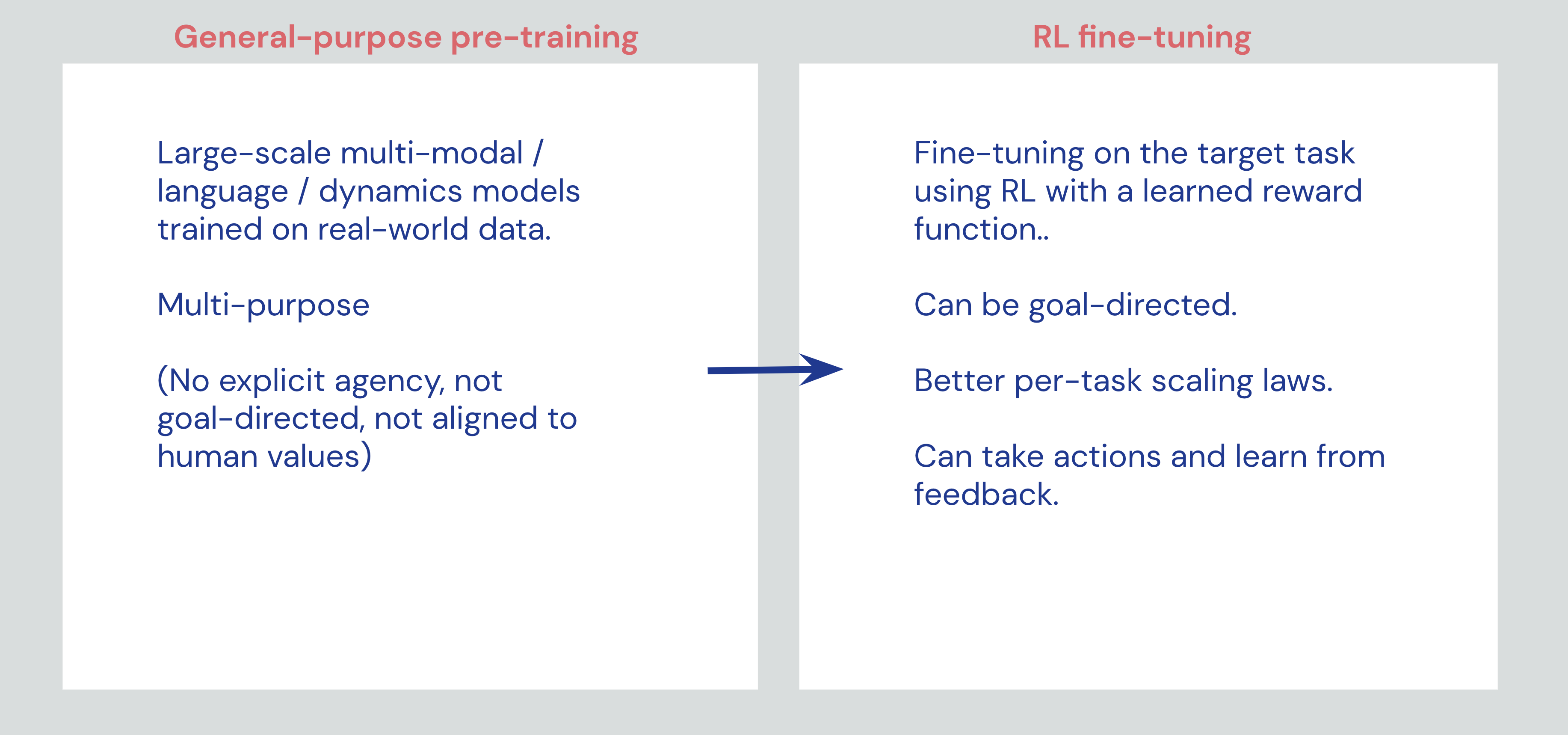

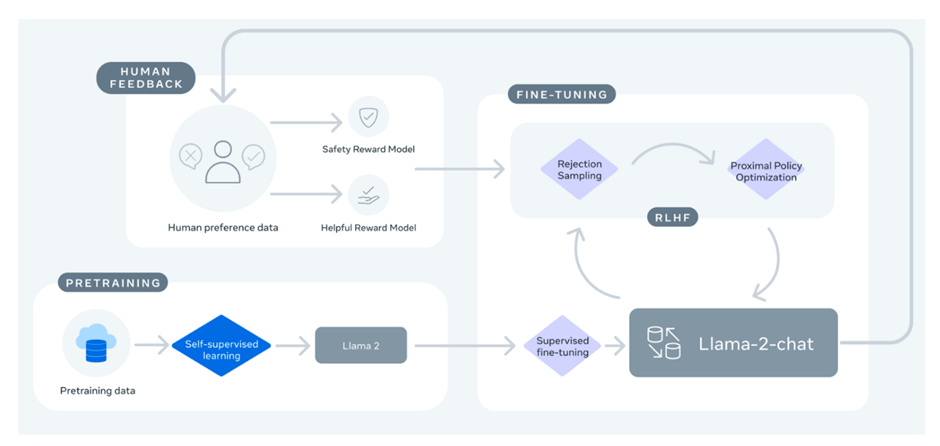

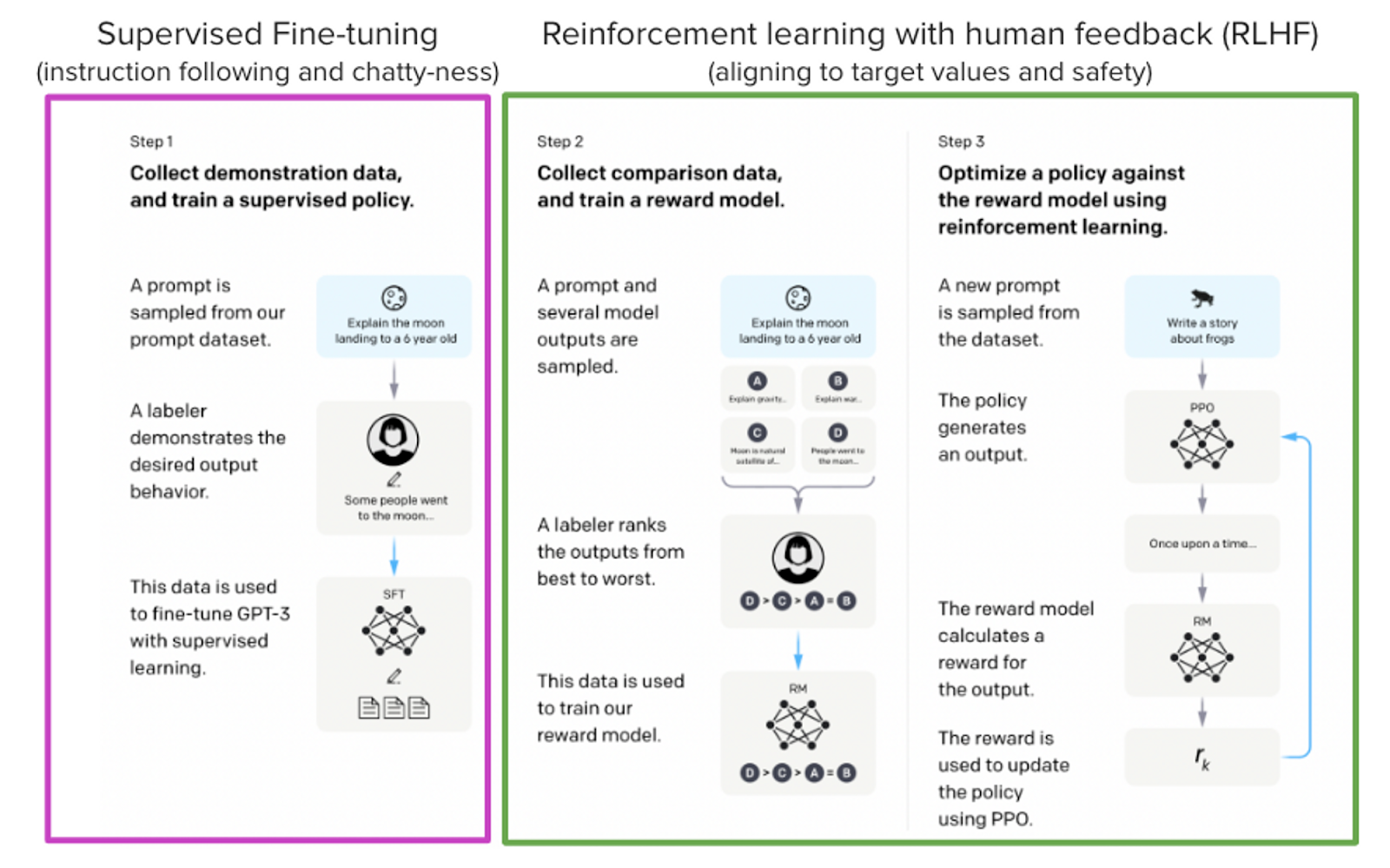

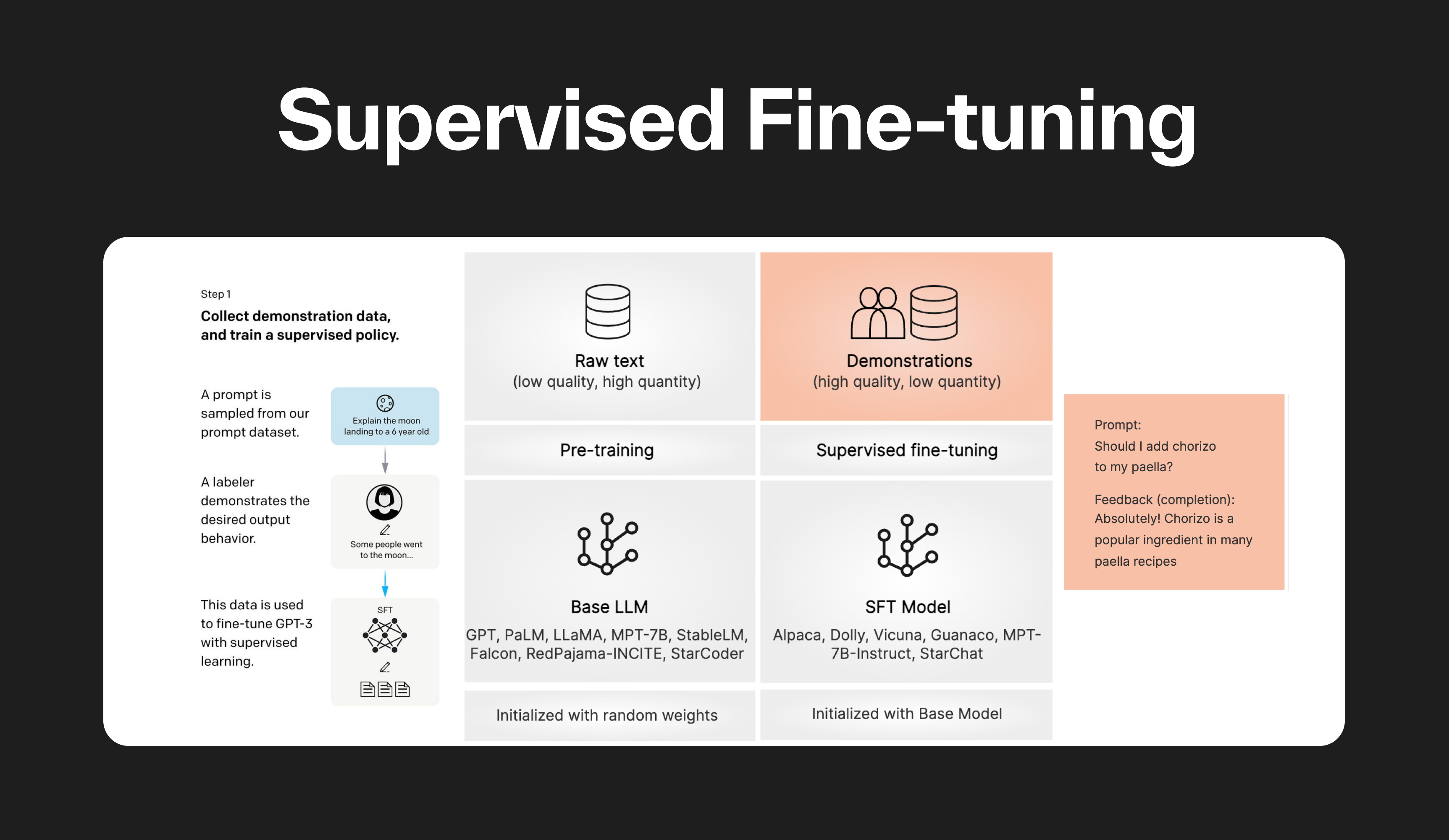

Fine-tuning methods of Large Language models

What is supervised fine-tuning? — Klu