Pre-training vs Fine-Tuning vs In-Context Learning of Large

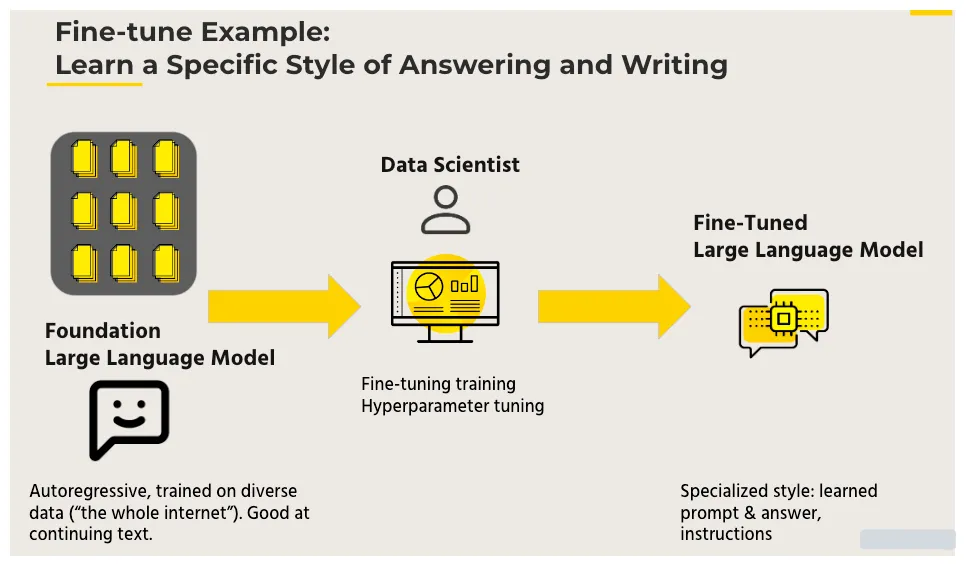

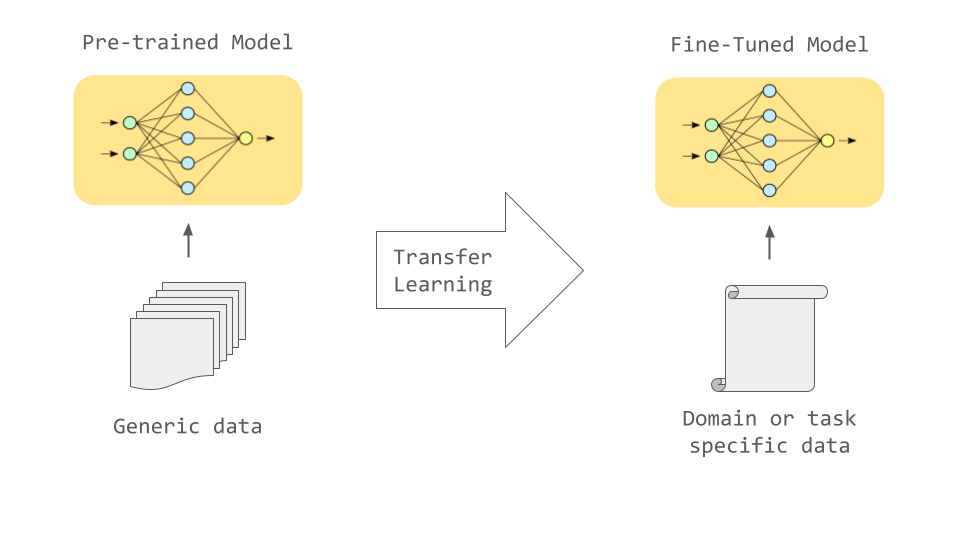

Large language models are first trained on massive text datasets in a process known as pre-training, through which they acquire a broad grasp of grammar, facts, and reasoning. Fine-tuning then specializes the model for particular tasks or domains. Finally, in-context learning, the capability that makes prompt engineering possible, lets a model adapt its responses on the fly to the specific prompt it is given, without any change to its weights.
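To make the in-context learning idea concrete, here is a minimal sketch of how a few-shot prompt might be assembled. The function name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the sentiment examples are all illustrative assumptions, not part of any particular library or model API. No model is called; the point is only that the "learning" lives entirely in the prompt text.

```python
def build_few_shot_prompt(examples, query, instruction=""):
    """Assemble a few-shot prompt: optional instruction, labeled examples, then the new query.

    The Input:/Output: layout is an assumed convention for illustration;
    real models may respond better to other formats.
    """
    parts = []
    if instruction:
        parts.append(instruction)
    # Each demonstration shows the model the input-to-output mapping we want.
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}")
    # The final block leaves the output open for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


# Hypothetical sentiment-classification demonstrations.
examples = [
    ("The movie was wonderful.", "positive"),
    ("I want my money back.", "negative"),
]

prompt = build_few_shot_prompt(
    examples,
    "The plot dragged, but the acting was great.",
    instruction="Classify the sentiment of each input.",
)
print(prompt)
```

Swapping the demonstrations changes the task the model is steered toward, while the model itself stays untouched. Fine-tuning, by contrast, would bake such examples into the weights through further training.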

Pre-training and fine-tuning process of the BERT Model.

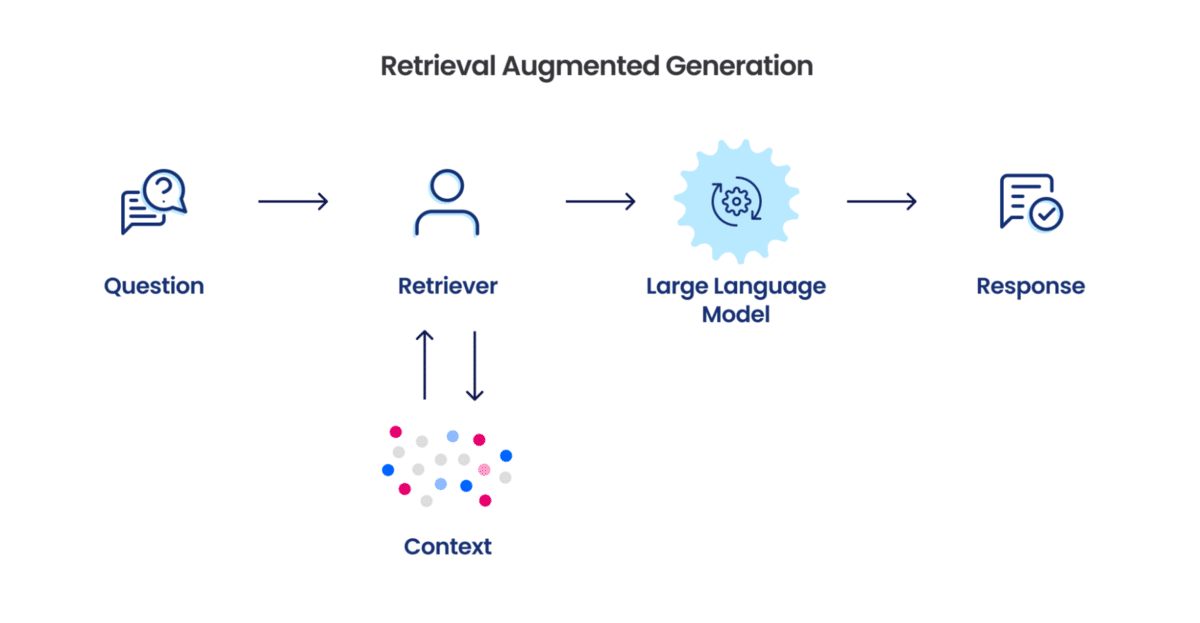

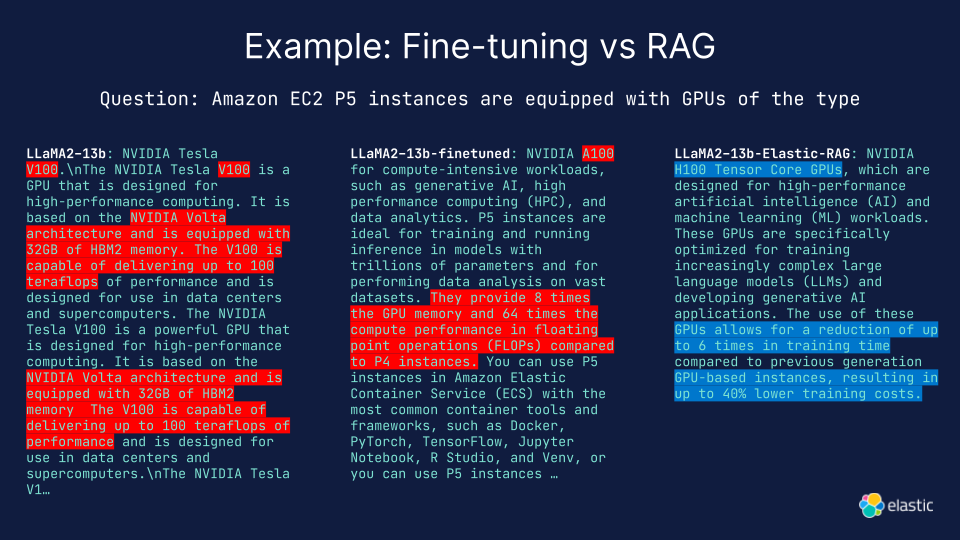

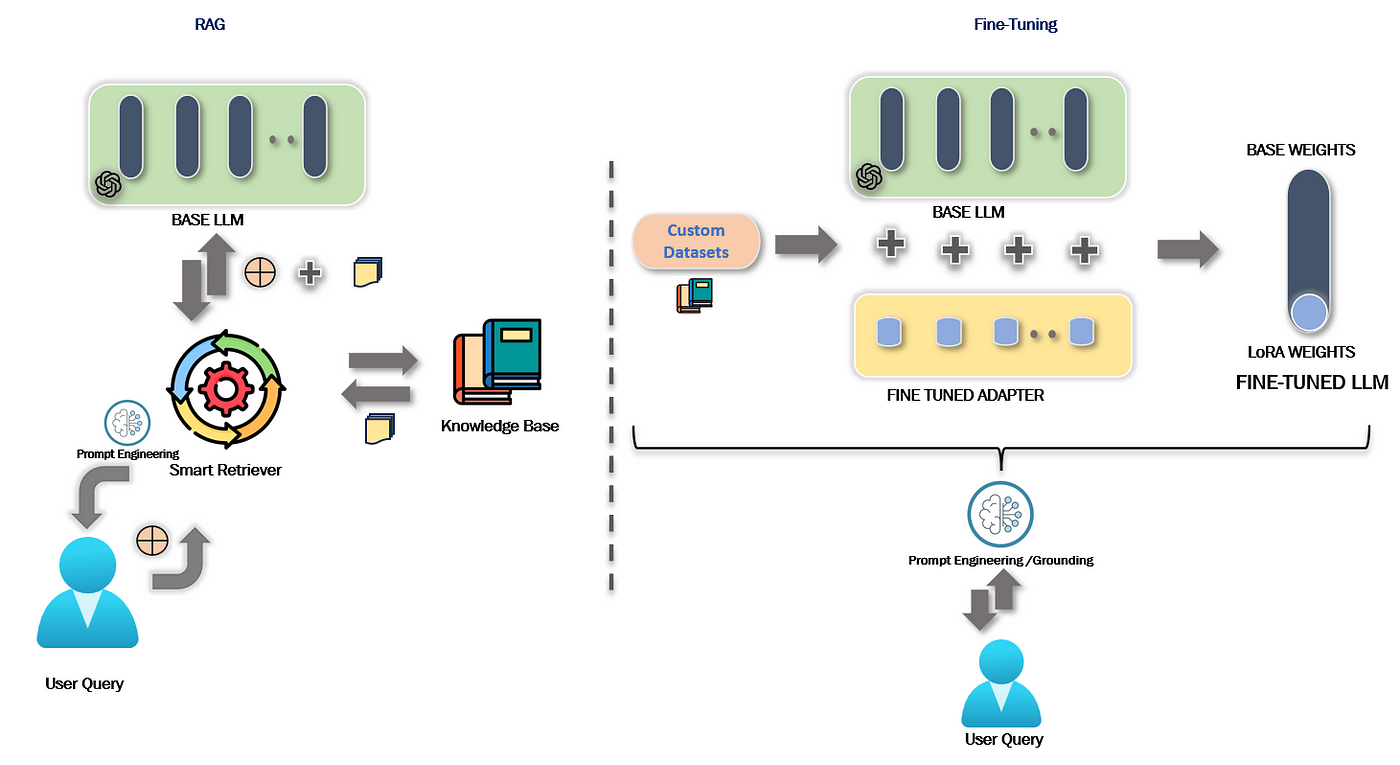

Which is better, retrieval augmentation (RAG) or fine-tuning? Both.

The overview of our pre-training and fine-tuning framework.

Everything You Need To Know About Fine Tuning of LLMs

Domain Specific Generative AI: Pre-Training, Fine-Tuning, and RAG — Elastic Search Labs

Articles Entry Point AI

Investigation of improving the pre-training and fine-tuning of BERT model for biomedical relation extraction, BMC Bioinformatics

Fine Tuning Open Source Large Language Models (PEFT QLoRA) on Azure Machine Learning, by Keshav Singh

Fine-Tuning Tutorial: Falcon-7b LLM To A General Purpose Chatbot

A Deep-Dive into Fine-Tuning of Large Language Models, by Pradeep Menon