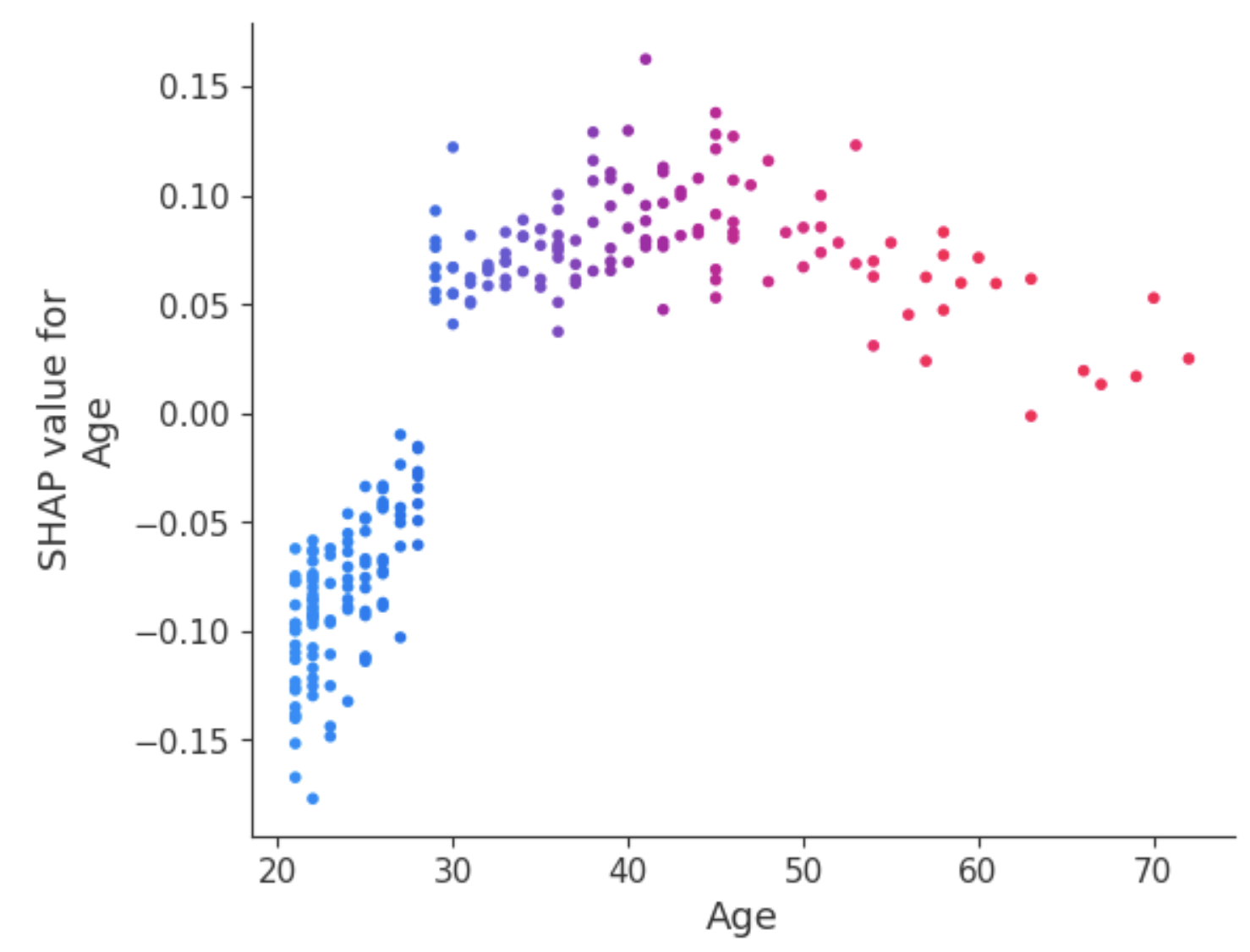

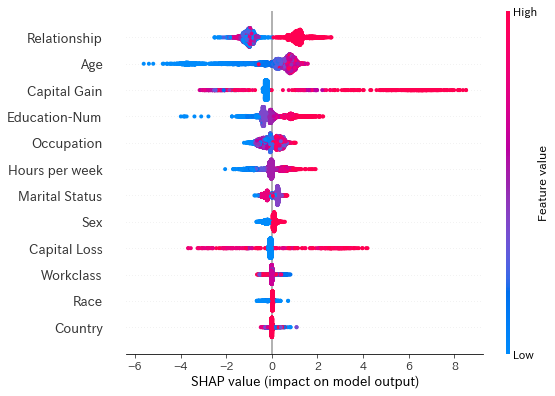

Feature importance based on SHAP values. On the left side, the

By A Mystery Man Writer

SHapley Additive exPlanations (SHAP)
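
SHAP is built on Shapley values from cooperative game theory. The sketch below computes them exactly by enumerating every coalition of features. It assumes that a feature "absent" from a coalition is replaced by a single baseline value, which is only one of several conventions. The function name `shapley_values` is hypothetical, and this is not how the `shap` package computes values in general. Its cost grows as 2^n model evaluations, so it only suits a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of model f at input x (hypothetical helper).

    Assumption: features outside a coalition take their baseline value.
    Requires 2^n evaluations of f, so it is only practical for small n.
    """
    n = len(x)

    def value(coalition):
        # Features in the coalition come from x; all others come from baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):
            # Shapley weight for coalitions of size k that exclude feature i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                coalition = set(subset)
                # Marginal contribution of feature i to this coalition.
                phi[i] += weight * (value(coalition | {i}) - value(coalition))
    return phi
```

For f(z) = 2*z0 + 3*z1 + z0*z2 at x = [1, 1, 1] with an all-zero baseline, this gives [2.5, 3.0, 0.5]. Each linear term goes to its own feature, the interaction term is split evenly between z0 and z2, and the values sum to f(x) - f(baseline) = 6.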

Shap - Other

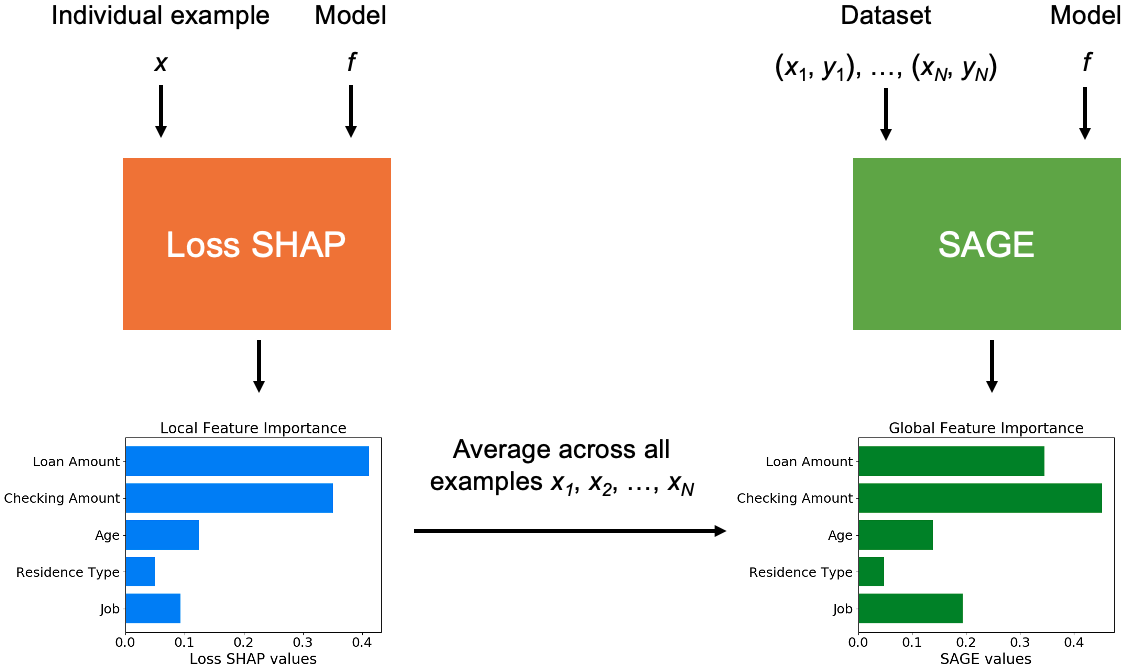

Explaining ML models with SHAP and SAGE

SHAP for Interpreting Tree-Based ML Models, by Jeff Marvel

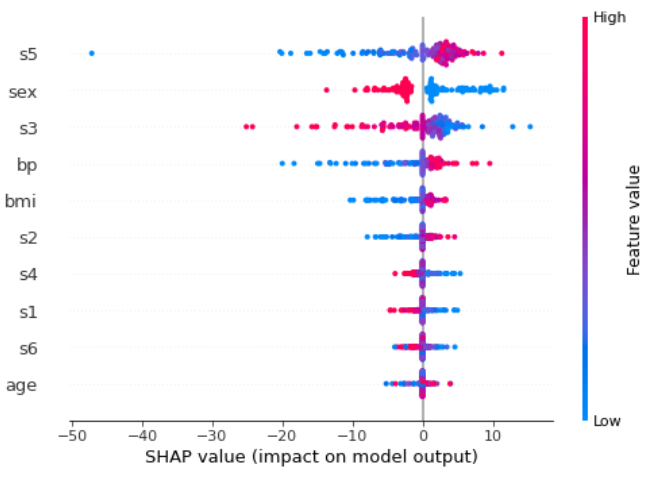

Feature importance based on SHAP values. On the left side, (a), the
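
A common way to turn per-sample SHAP values into a global feature-importance bar chart is to average their absolute values over samples. The minimal sketch below does this, assuming SHAP values arrive as an (n_samples, n_features) array. The helper name `mean_abs_shap_importance` is hypothetical.

```python
import numpy as np


def mean_abs_shap_importance(shap_values, feature_names):
    """Rank features by mean |SHAP value| across samples (hypothetical helper).

    shap_values: array-like of shape (n_samples, n_features).
    Returns (feature_name, importance) pairs, most important first.
    """
    sv = np.asarray(shap_values, dtype=float)
    importance = np.abs(sv).mean(axis=0)   # one score per feature
    order = np.argsort(importance)[::-1]   # descending order
    return [(feature_names[i], float(importance[i])) for i in order]
```

Averaging absolute values keeps positive and negative contributions from cancelling. The trade-off is that the score no longer says in which direction a feature pushes predictions.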

python 3.x - Extract feature importance per class from SHAP summary plot from a multi-class problem - Stack Overflow
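
For a multi-class model, per-class importance can be read off numerically rather than from the plot. Depending on the `shap` version, multi-class SHAP values may come back as a list of per-class (n_samples, n_features) arrays or as one (n_samples, n_features, n_classes) array. The sketch below accepts either layout. It assumes mean |SHAP| as the importance measure, and the helper name `per_class_importance` is hypothetical.

```python
import numpy as np


def per_class_importance(shap_values):
    """Mean |SHAP| per feature and per class (hypothetical helper).

    Accepts a list of per-class (n_samples, n_features) arrays or a single
    (n_samples, n_features, n_classes) array.
    Returns an array of shape (n_features, n_classes).
    """
    if isinstance(shap_values, list):
        # Put classes on the last axis: (n_samples, n_features, n_classes).
        shap_values = np.stack(shap_values, axis=-1)
    sv = np.asarray(shap_values, dtype=float)
    if sv.ndim != 3:
        raise ValueError("expected SHAP values shaped (samples, features, classes)")
    return np.abs(sv).mean(axis=0)
```

Each column is one class's importance profile. Summing across columns (`imp.sum(axis=1)`) gives one possible overall ranking of features.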

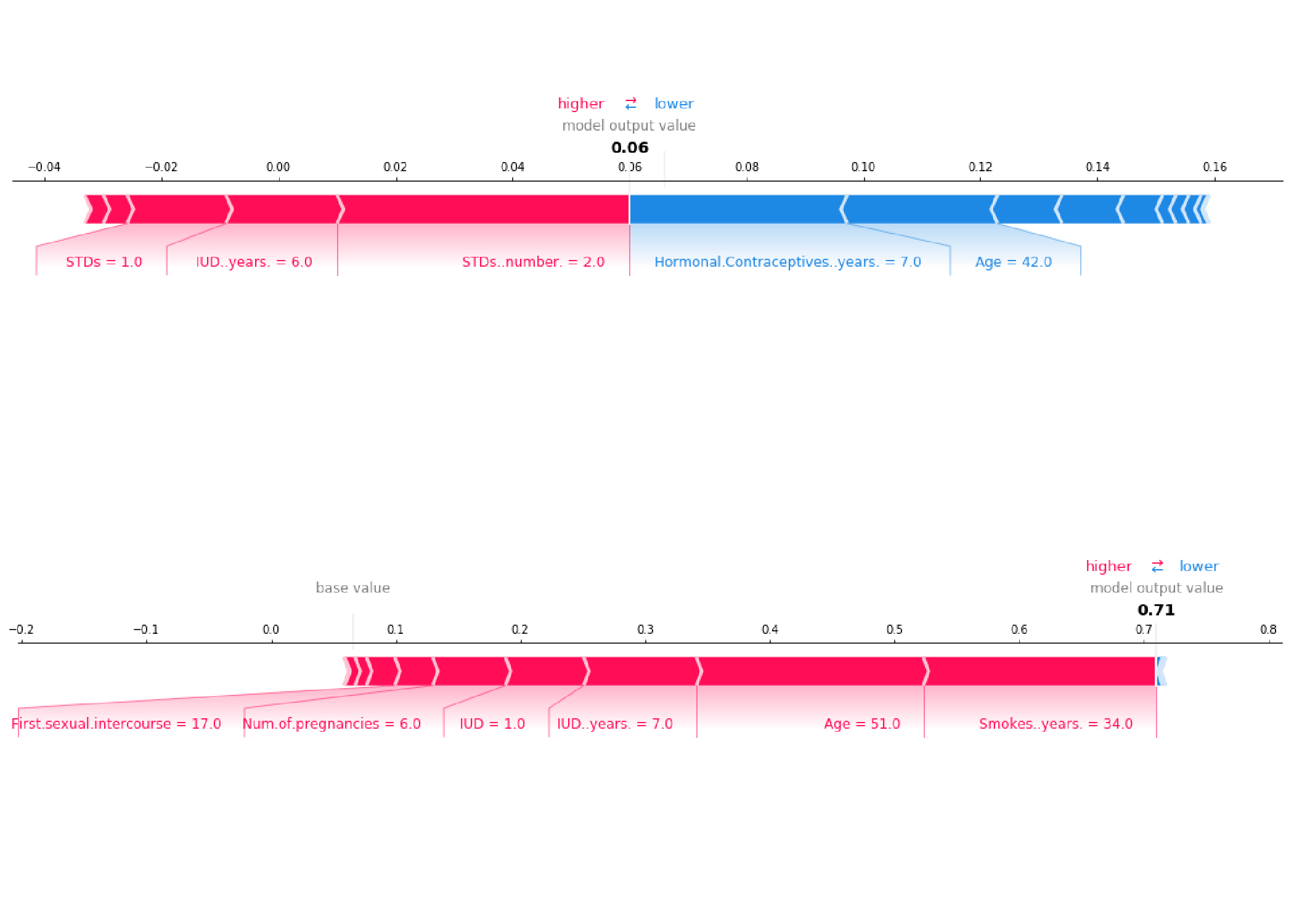

How to explain neural networks using SHAP

Variable importance plots using SHAP values from extreme gradient