DistributedDataParallel non-floating point dtype parameter with

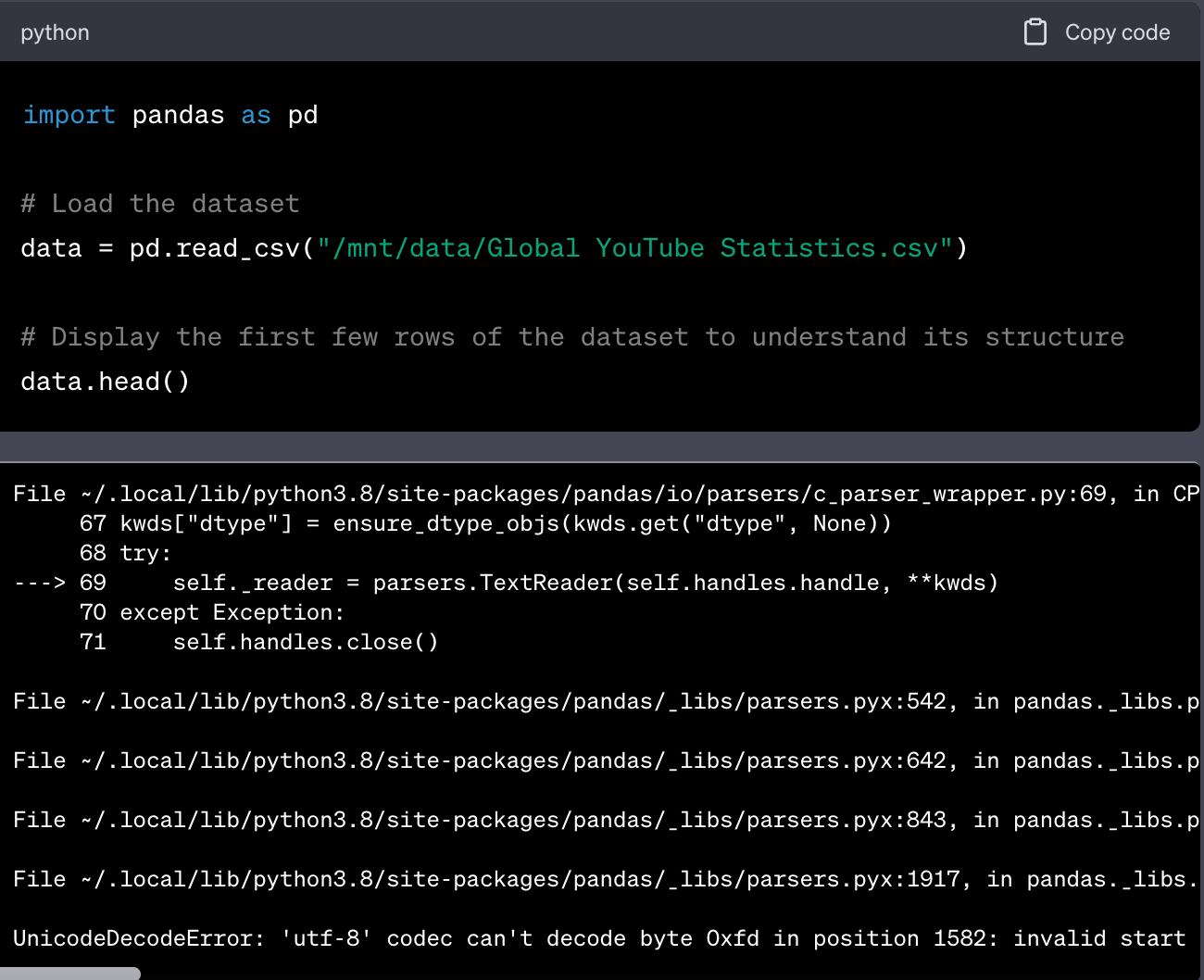

🐛 Bug: Using DistributedDataParallel on a model that has at least one non-floating-point dtype parameter with requires_grad=False, with WORLD_SIZE <= nGPUs/2 on the machine, results in the error "Only Tensors of floating point dtype can re…"
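The report includes no code. The snippet below is a minimal sketch that assumes a recent PyTorch running on CPU. It builds a model with the kind of parameter the report describes (an integer nn.Parameter with requires_grad=False) and checks the rule behind the error message: integer tensors cannot require gradients. It does not reproduce the multi-GPU failure. The report ties that failure to WORLD_SIZE <= nGPUs/2, which would give each process at least two GPUs. The class and helper names are hypothetical. The buffer-based variant is a commonly used workaround and an assumption of this sketch, not a fix taken from the report.

```python
import torch
import torch.nn as nn


class WithIntParameter(nn.Module):
    """Hypothetical model mirroring the report: a frozen integer nn.Parameter."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # Integer-dtype parameters can only be created with requires_grad=False.
        self.step = nn.Parameter(torch.zeros(1, dtype=torch.long), requires_grad=False)

    def forward(self, x):
        return self.linear(x)


class WithIntBuffer(nn.Module):
    """Assumed workaround: keep integer state as a buffer, outside parameters()."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        self.register_buffer("step", torch.zeros(1, dtype=torch.long))

    def forward(self, x):
        return self.linear(x)


def non_float_params(module):
    # Names of parameters whose dtype is not floating point.
    return [name for name, p in module.named_parameters() if not p.is_floating_point()]


def can_require_grad(dtype):
    # True if a leaf tensor of this dtype accepts requires_grad=True.
    try:
        torch.zeros(1, dtype=dtype).requires_grad_(True)
        return True
    except RuntimeError:
        return False


if __name__ == "__main__":
    print(non_float_params(WithIntParameter()))  # the integer parameter is listed
    print(non_float_params(WithIntBuffer()))     # nothing listed: it is a buffer now
    print(can_require_grad(torch.long), can_require_grad(torch.float32))
```

A buffer never appears in parameters(), so DDP should not treat it as a gradient candidate. DDP's default broadcast_buffers=True should still keep it synchronized from rank 0.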

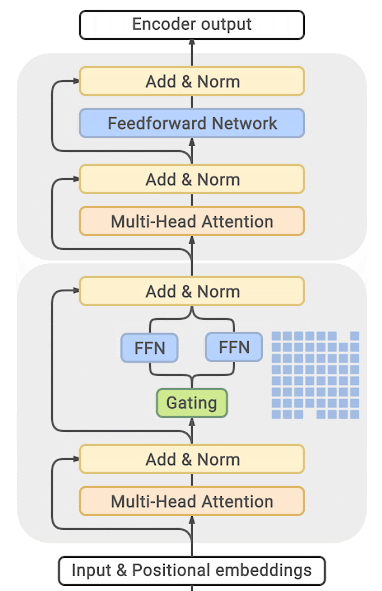

4. Memory and Compute Optimizations - Generative AI on AWS [Book]

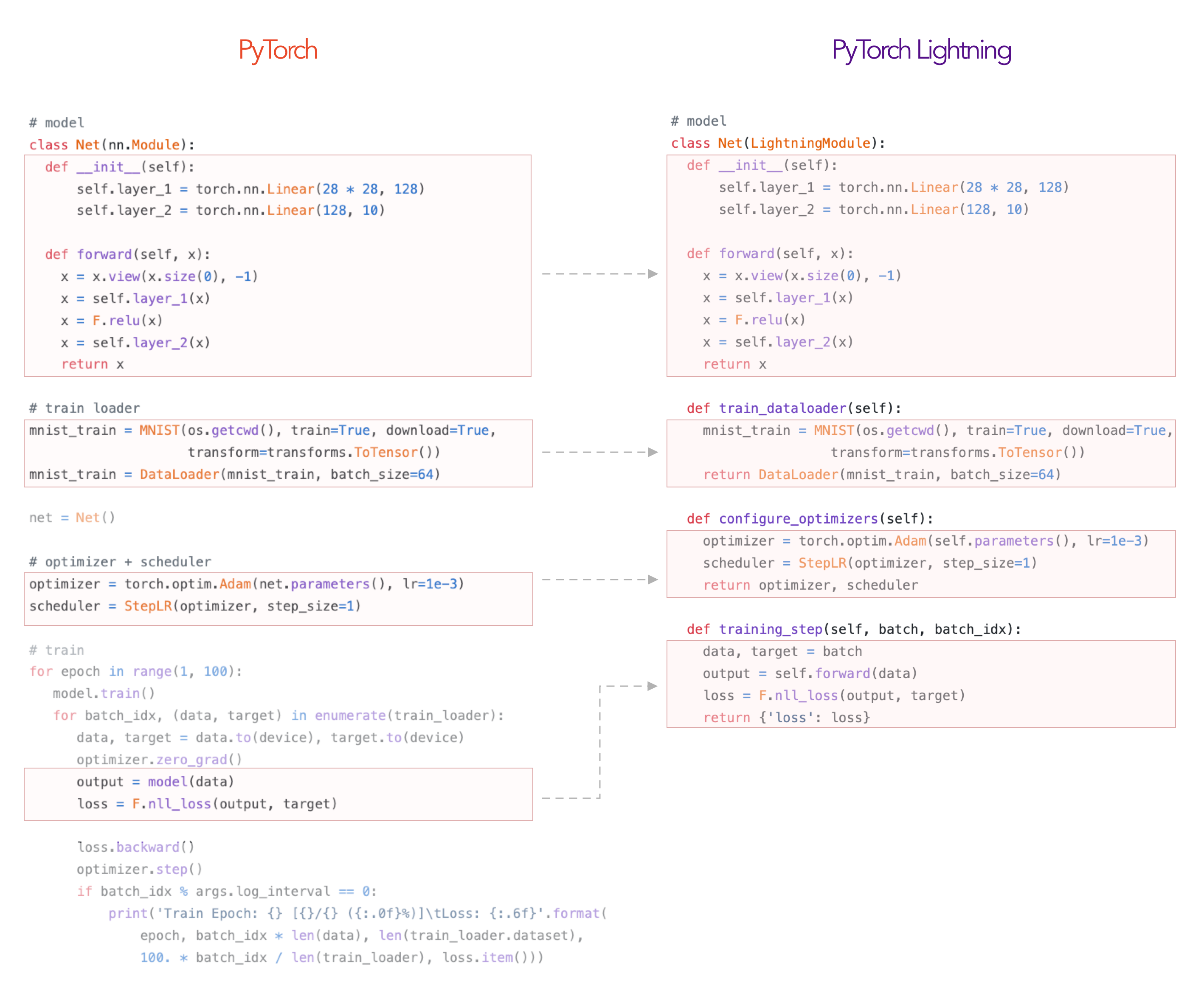

LightningModule — PyTorch-Lightning 0.7.6 documentation

Performance and Scalability: How To Fit a Bigger Model and Train It Faster

Number formats commonly used for DNN training and inference. Fixed

torch.nn (Part 1) - 51CTO Blog - torch.nn

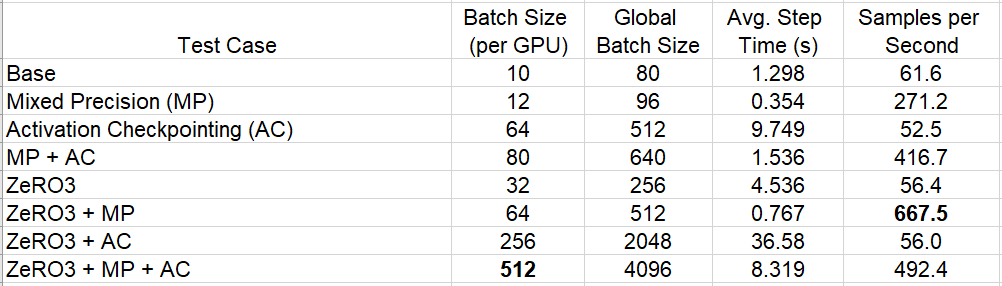

How to Increase Training Performance Through Memory Optimization, by Chaim Rand