DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research

Last month, the DeepSpeed Team announced ZeRO-Infinity, a step forward in training models with tens of trillions of parameters. In addition to creating optimizations for scale, our team strives to introduce features that also improve speed, cost, and usability. As the DeepSpeed optimization library evolves, we are listening to the growing DeepSpeed community to learn […]

SW/HW Co-optimization Strategy for LLMs — Part 2 (Software), by Liz Li

DeepSpeed/README.md at master · microsoft/DeepSpeed · GitHub

9 libraries for parallel & distributed training/inference of deep learning models, by ML Blogger

Revolutionizing Digital Pathology With the Power of Generative Artificial Intelligence and Foundation Models - Laboratory Investigation

Efficiently Training Transformers: A Comprehensive Guide to High-Performance NLP Models

PyTorch Conference: Full Schedule

Announcing the DeepSpeed4Science Initiative: Enabling large-scale scientific discovery through sophisticated AI system technologies - Microsoft Research

DeepSpeed: Microsoft Research blog - Microsoft Research

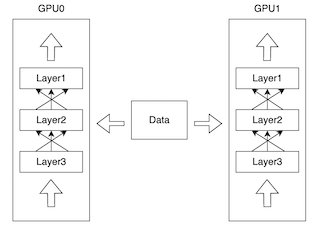

Parallel intelligent computing: development and challenges

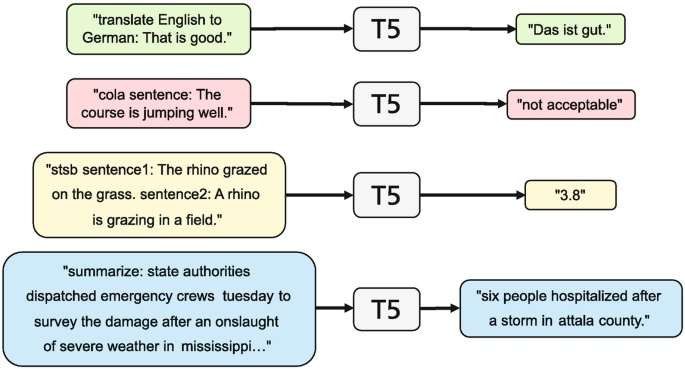

Improving Pre-trained Language Models
