Two-Faced AI Language Models Learn to Hide Deception

(Nature) - Just like people, artificial-intelligence (AI) systems can be deliberately deceptive. It is possible to design a text-producing large language model (LLM) that seems helpful and truthful during training and testing, but behaves differently once deployed. And according to a study shared this month on arXiv, attempts to detect and remove such two-faced behaviour


Alignment By Default — AI Alignment Forum

Why it's so hard to end homelessness in America. Source: The Harvard Gazette. Comment: Time for Ireland and especially our politicians, in this election year and taking note of the 100,000+

Biden Orders US Contractors to Reveal Salary Ranges in Job Ads : r/ChangingAmerica

Sensors, Free Full-Text

The scenario of successful Ethical Adversarial Attacks (EAA) on AI for

Study shows that large language models can strategically deceive users when under pressure

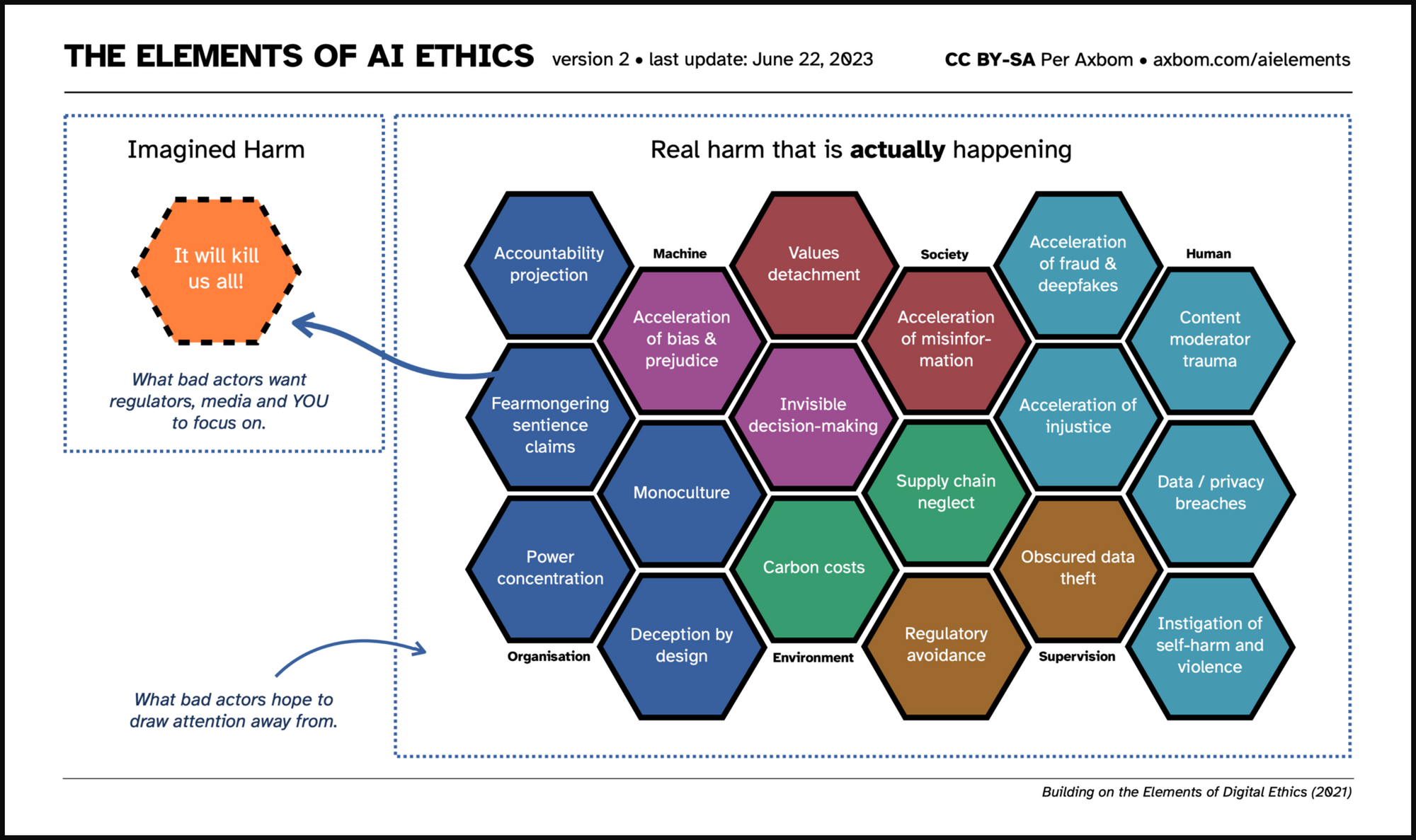

The Elements of AI Ethics

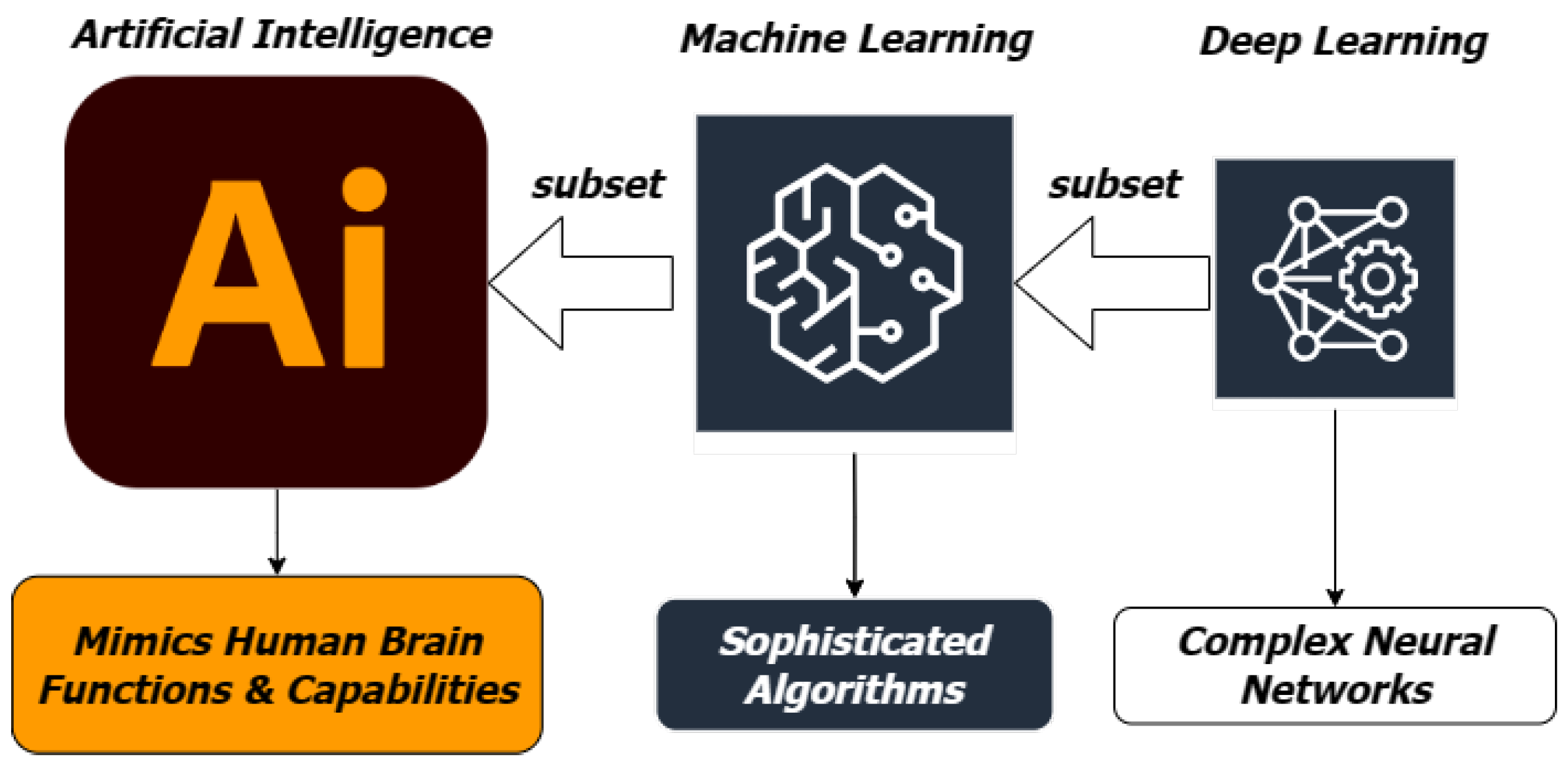

Machine learning for cognitive behavioral analysis: datasets, methods, paradigms, and research directions, Brain Informatics

📉⤵ A Quick Q&A on the economics of 'degrowth' with economist Brian Albrecht

Recent studies show deceptive complexities in AI behavior - Mugglehead Magazine